Discover the top LLM observability tools to ensure your AI systems are accurate, cost-efficient, and compliant while minimizing risks like hallucinations.

Ka Ling Wu

Co-Founder & CEO, Upsolve AI

Nov 14, 2025

10 min

Large language models are now widely used in production systems such as chatbots, internal copilots, analytics tools, and automated workflows.

As LLM usage scales, new challenges appear. Model outputs can drift over time, hallucinations can introduce incorrect or risky responses, costs can grow unpredictably, and compliance becomes harder to manage. Without proper visibility, teams often discover these issues only after they impact users, budgets, or trust.

LLM observability addresses this problem by giving teams continuous insight into how models behave in real-world usage. Instead of treating LLMs as black boxes, observability tools help monitor performance, detect anomalies, control costs, and ensure outputs remain reliable and compliant.

In this guide, we explain what LLM observability is, why it matters, and review the best LLM observability tools to help you choose the right solution for your AI stack.

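TL;DR: The 5 Best LLM Observability & Monitoring Tools

Here's a quick overview of the best observability solutions (a deep dive on each follows later):

Upsolve – Best balanced, all-in-one

Arize AI – Best for model performance insights

Weights & Biases (W&B) – Best for experiment tracking

LangSmith – Best for debugging and improving LLM workflows

Fiddler AI – Best for explainability and compliance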

What is LLM Observability & How Does It Work?

LLM observability is the practice of monitoring and analyzing how large language models behave in real-world production environments.

It gives teams visibility into model inputs, outputs, and system performance so they can keep AI systems accurate, reliable, and compliant as usage scales. Observability tools show how models respond to different prompts, how performance changes over time, and where failures or risks begin to appear.

At a practical level, LLM observability tools collect data from every interaction across the AI stack. This includes prompts and responses, latency, token usage, error rates, and signals related to drift, hallucinations, or bias. Teams analyze these signals to detect issues early, refine prompts or model configurations, and prevent repeated failures in production.
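To make the data-collection step concrete, here is a minimal Python sketch. It assumes a generic `call_model(prompt) -> str` function stands in for your LLM client; the `observe` wrapper and `LLMCallRecord` type are hypothetical names for illustration, not any vendor's SDK, and whitespace splitting is only a rough stand-in for the model's real tokenizer. Each call, successful or failed, records the prompt, response, latency, token counts, and any error.

```python
import time
import uuid
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class LLMCallRecord:
    """One logged interaction (hypothetical schema for illustration)."""
    trace_id: str
    prompt: str
    response: Optional[str]
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    error: Optional[str] = None


def count_tokens(text: str) -> int:
    # Rough approximation: whitespace split. Real systems use the model's tokenizer.
    return len(text.split())


def observe(call_model: Callable[[str], str], log: List[LLMCallRecord]) -> Callable[[str], str]:
    """Wrap a model call so every invocation appends a record to `log`."""
    def wrapped(prompt: str) -> str:
        start = time.perf_counter()
        response: Optional[str] = None
        error: Optional[str] = None
        try:
            response = call_model(prompt)
            return response
        except Exception as exc:
            error = repr(exc)
            raise  # re-raise so the application still sees the failure
        finally:
            # Runs on success and on failure, so errors are logged too.
            log.append(LLMCallRecord(
                trace_id=str(uuid.uuid4()),
                prompt=prompt,
                response=response,
                latency_ms=(time.perf_counter() - start) * 1000,
                prompt_tokens=count_tokens(prompt),
                completion_tokens=count_tokens(response or ""),
                error=error,
            ))
    return wrapped


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM API call.
    if not prompt:
        raise ValueError("empty prompt")
    return "Paris is the capital of France."


records: List[LLMCallRecord] = []
ask = observe(fake_model, records)
ask("What is the capital of France?")
try:
    ask("")
except ValueError:
    pass  # the failure is still captured in `records`

for r in records:
    print(r.prompt_tokens, r.completion_tokens, r.error)
```

In production, records like these would usually be exported to an observability backend for storage and dashboards; the in-memory list here only keeps the sketch self-contained.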

By continuously monitoring model behavior and system metrics, organizations can maintain consistent output quality, control costs, and reduce the risk of incorrect or unsafe responses reaching end users.
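Drift is a good example of what continuous monitoring is meant to catch. One common way to quantify it is the Population Stability Index (PSI), which compares how a metric is distributed in a baseline window versus a recent window. The sketch below is a generic illustration, not how any specific tool in this guide computes drift; it assumes response length in tokens as the monitored signal, and the bin edges and sample values are made up. A commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned to your own data.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin defined by sorted `edges` (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # Bin index = how many edges the value is at or above.
        counts[sum(v >= e for e in edges)] += 1
    eps = 1e-6  # floor for empty bins so the log below is defined
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (cur - base) * ln(cur / base)."""
    base = bin_shares(baseline, edges)
    cur = bin_shares(current, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical response lengths (in tokens) from a baseline period vs. this week.
baseline_lengths = [40, 55, 60, 45, 50, 52, 48, 58]
current_lengths = [120, 130, 110, 125, 115, 140, 118, 122]
edges = [50, 100, 150]

print(round(psi(baseline_lengths, baseline_lengths, edges), 6))  # identical samples
print(psi(baseline_lengths, current_lengths, edges) > 0.25)     # large shift in lengths
```

Response length is only a cheap proxy; the same function works for any numeric signal you log, such as latency or an evaluator score.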

Core Elements of LLM Observability & Monitoring Tools

Latency – Tracks how fast your model responds. Slow responses can frustrate users or signal infrastructure problems.

Cost – Tracks how much your AI operations cost, which for API-based LLMs scales with token usage, and helps optimize cloud usage.

Accuracy – Checks whether the AI provides correct and useful answers.

Hallucinations – Detects when the AI makes up false or misleading information.

Safety & Compliance – Makes sure your outputs meet ethical and regulatory requirements, especially in sensitive industries like finance and healthcare.

Monitoring these metrics helps you catch problems early, adjust settings, and keep your AI working safely and efficiently.
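The latency, cost, and error signals above usually reduce to a few aggregates over logged calls. Here is a minimal Python sketch, assuming each call was logged with its latency, token counts, and an optional error. The per-token prices are placeholders, not any provider's real rates; replace them with your provider's pricing. `statistics.quantiles` is called with `method="inclusive"` so the 95th-percentile latency stays within the observed range.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import List, Optional

# Placeholder prices in USD per 1,000 tokens (assumed, not real rates).
PROMPT_PRICE_PER_1K = 0.0005
COMPLETION_PRICE_PER_1K = 0.0015


@dataclass
class Call:
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    error: Optional[str] = None


def call_cost(c: Call) -> float:
    """Estimated cost of one call from its token counts."""
    return (c.prompt_tokens / 1000) * PROMPT_PRICE_PER_1K + (
        c.completion_tokens / 1000
    ) * COMPLETION_PRICE_PER_1K


def summarize(calls: List[Call]) -> dict:
    """Aggregate latency, error, and cost metrics for a batch of logged calls."""
    if not calls:
        raise ValueError("no calls to summarize")
    latencies = [c.latency_ms for c in calls]
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    if len(latencies) > 1:
        p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0]
    return {
        "calls": len(calls),
        "p95_latency_ms": p95,
        "error_rate": sum(c.error is not None for c in calls) / len(calls),
        "total_cost_usd": sum(call_cost(c) for c in calls),
    }


calls = [
    Call(100.0, 1000, 2000),
    Call(200.0, 1000, 2000),
    Call(300.0, 1000, 2000, error="timeout"),
    Call(400.0, 1000, 2000),
]
summary = summarize(calls)
print(summary)
```

Percentiles such as p95 are generally more informative than averages for latency, because a small share of very slow calls can hurt users while barely moving the mean.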

What to Look for in LLM Observability & Monitoring Tools

Choosing the right LLM observability tool has a major impact on how quickly your team can detect and fix problems in production.

The best tools should provide:

Real-time insights to monitor queries and responses instantly, helping you catch issues before they escalate.

Cost optimization features that track usage patterns and suggest ways to reduce unnecessary cloud expenses.

Accuracy tracking that shows whether your AI delivers reliable results, improving user trust and satisfaction.

Risk detection capabilities to identify hallucinations and bias early, preventing misinformation and fairness issues.

Compliance and safety tools that help align AI systems with regulatory requirements and ethical standards.

Scalability that supports the growth of your AI systems without adding unnecessary complexity.

With these key features, you can confidently deploy LLMs that are powerful, efficient, and secure while staying aligned with your goals.
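Real-time insight usually comes down to alerting as calls stream in rather than waiting for a daily report. Here is a minimal Python sketch of one such rule: an error-rate alert over a rolling window of recent calls. The class name, window size, and threshold are illustrative assumptions, not settings from any tool reviewed here; production systems generally also add alert deduplication and routing to a pager or chat channel.

```python
from collections import deque
from typing import Deque, List


class RollingErrorRateAlert:
    """Fire when the error rate over the last `window` calls exceeds `threshold`.

    Defaults are illustrative, not recommendations.
    """

    def __init__(self, window: int = 50, threshold: float = 0.1, min_calls: int = 10):
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True = call failed
        self.threshold = threshold
        self.min_calls = min_calls

    def record(self, failed: bool) -> bool:
        """Record one call outcome; return True if the alert should fire."""
        self.outcomes.append(failed)
        if len(self.outcomes) < self.min_calls:
            return False  # not enough data yet to judge
        error_rate = sum(self.outcomes) / len(self.outcomes)
        return error_rate > self.threshold


alert = RollingErrorRateAlert(window=10, threshold=0.2, min_calls=10)
fired: List[int] = []
# Simulated stream: 10 healthy calls, then a burst of failures.
stream = [False] * 10 + [True] * 5
for i, failed in enumerate(stream):
    if alert.record(failed):
        fired.append(i)
print(fired)  # → [12, 13, 14]
```

Requiring `min_calls` before firing avoids alerts triggered by a single early failure, and the fixed-size `deque` keeps memory bounded no matter how many calls arrive.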

How Guac Reduced Costs by 60% and Improved LLM Monitoring with Upsolve

See how Guac, a leading AI-driven platform, replaced outdated tools, cut operational costs, and scaled their LLM observability for faster insights. Read the full case study to learn why Guac calls Upsolve “a game-changer for AI teams.”

How We Evaluated the Best LLM Observability and Monitoring Tools

We evaluated each LLM observability tool based on criteria that matter in real production environments, not demos or isolated experiments.

Our assessment focused on:

Ease of monitoring and usability: How simple it is to monitor LLM behavior while still offering powerful features for advanced use cases.

Performance, error, and cost visibility: How effectively the tool helps track AI performance, detect errors, and control usage and costs.

Scalability and production readiness: Whether the platform can scale with growing workloads and integrate smoothly into existing workflows.

Security and compliance support: Availability of governance, access control, and compliance features for sensitive or regulated environments.

Actionable insights and debugging: How easily teams can investigate issues and take action without digging through raw data.

We applied the same criteria to every tool. Because each tool performs differently across them, the best choice depends on your team’s technical needs, scale, and production requirements.

Quick Comparison for Best LLM Observability Tools

| Tool | Key Features | Best For | Pricing Details |
|---|---|---|---|
| Upsolve | Real-time monitoring, cost optimization, safety alerts, scalable dashboards | Enterprises needing full observability and performance management | Growth Plan: From $1,000/month • Professional Plan: From $2,000/month • Enterprise: Custom pricing |
| Arize AI | Model performance tracking, drift monitoring, bias detection, analytics alerts | Large teams deploying models at scale | Arize Phoenix: Free & Open source • AX Free: $0/mo • AX Pro: $50/mo • AX Enterprise: Custom |
| Weights & Biases (W&B) | Experiment tracking, versioning, collaboration tools, dashboards | Data science teams focused on model development | Free: $0/mo • Pro: $60+/mo per user • Enterprise: Custom |
| LangSmith | SDK integration, prompt debugging, version control, root cause analysis | Developers integrating observability into pipelines | Developer: Free • Plus: $39/month per seat • Enterprise: Custom pricing |
| Fiddler AI | Explainability workflows, bias attribution, drift alerts, governance scorecards | Enterprises and regulated industries needing explainability, compliance, and monitoring | Free • Developer: $0.002 per trace • Enterprise: Custom pricing |

Here’s a detailed look at the top LLM observability platforms:

1. Upsolve – Best Balanced, All-in-One

Upsolve is an end-to-end LLM agent observability platform designed for enterprises that need to scale AI responsibly without compromising on reliability, compliance, or performance.

What are the Key LLM Observability & Monitoring Features?

Real-time monitoring helps track AI performance instantly for quick issue detection.

Compliance-ready dashboards ensure outputs meet industry regulations and audit requirements.

Multilingual support allows global teams to monitor AI in multiple languages.

Cost optimization tools help reduce cloud expenses while scaling AI operations.

What is the Pricing?

Growth Plan: From $1,000/month with dashboards, 50 tenants, and core analytics.

Professional Plan: From $2,000/month with unlimited dashboards, AI analytics, and support.

Enterprise Plan: Custom pricing with full access, compliance, and 24/7 support.

Pros

Responsive and quick support helps resolve questions without delays.

Straightforward analytics explain product benefits clearly to teams and customers.

Fast insights with high-quality visualizations ready to share instantly.

Cons

Limited theming options, though APIs provide workarounds.

Learning curve for customization, but documentation and support are helpful.

Initial setup needs coordination, though it’s manageable with proper planning.

Best For:

Organizations that need a balanced solution delivering compliance, monitoring, and cost optimization without overwhelming technical complexity.

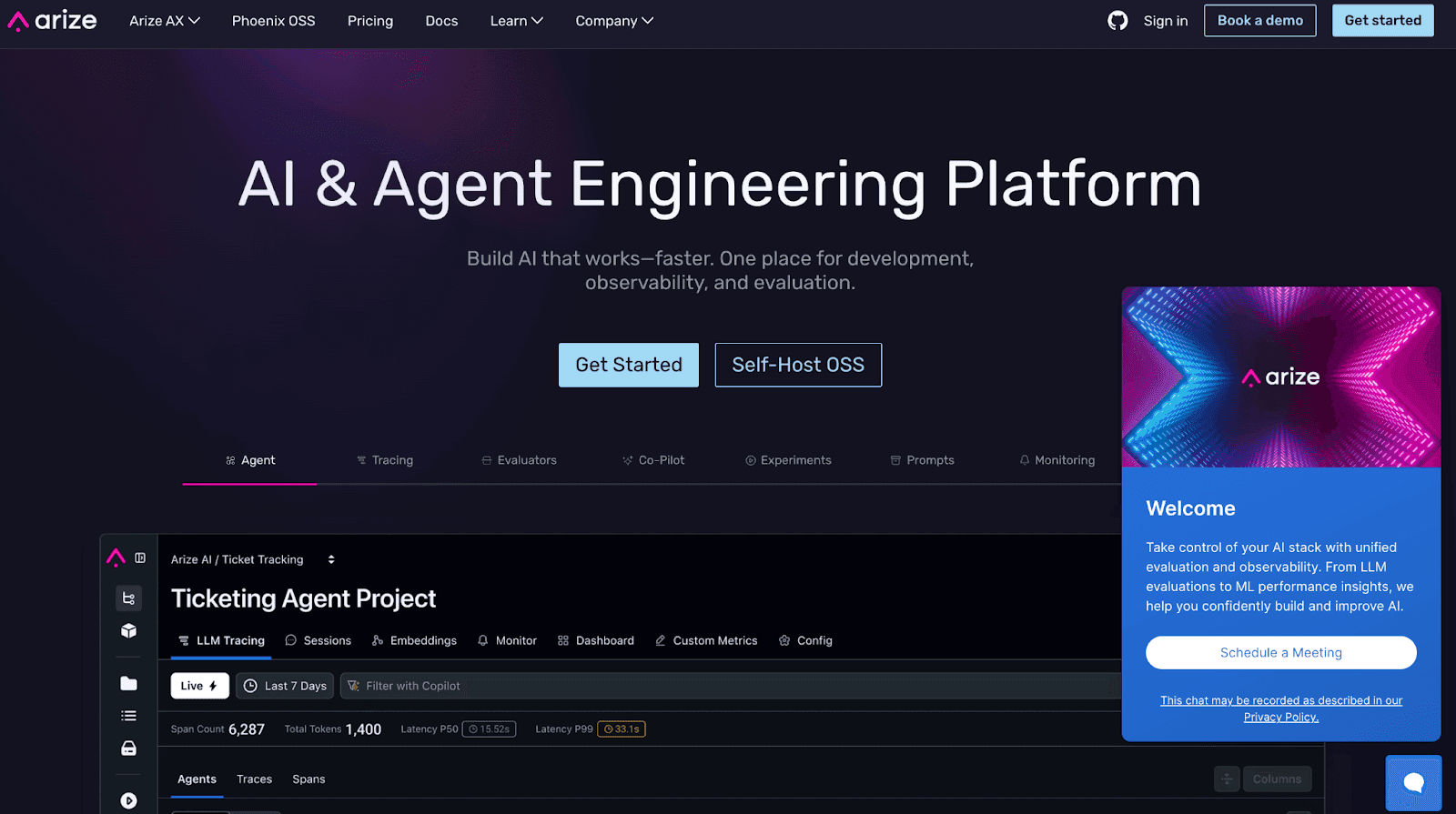

2. Arize AI – Best for Model Performance Insights

Arize AI offers a model observability platform that focuses on understanding and improving machine learning performance across production environments.

What are the Key LLM Observability & Monitoring Features?

Performance tracking helps teams spot accuracy drops and make timely corrections.

Bias detection identifies fairness issues to ensure equitable model outcomes.

Drift monitoring alerts teams when data changes risk reducing model reliability.

Root cause analysis allows users to understand why models fail or underperform.

What is the Pricing?

Arize Phoenix – Free & open source: Unlimited users; monitoring and troubleshooting for machine learning models.

AX Free – $0/mo: Basic monitoring for developers with 25k spans/month and 7-day data retention.

AX Pro – $50/mo: For startups and small teams, offering 100k spans/month, 15-day retention, and 3 users.

AX Enterprise – Custom: Full observability with unlimited spans, advanced integrations, compliance, and dedicated support.

Pros:

Actionable insights into model drift and data issues enhance proactive monitoring.

Intuitive interface simplifies tracking and understanding model health.

Seamless integration with various ML frameworks streamlines setup.

Cons:

Limited customization in dashboards may not meet all user needs.

Learning curves for advanced features could require additional training.

Performance issues reported with large-scale deployments.

Best For:

Organizations that prioritize deep model performance insights and data-driven improvements at scale.

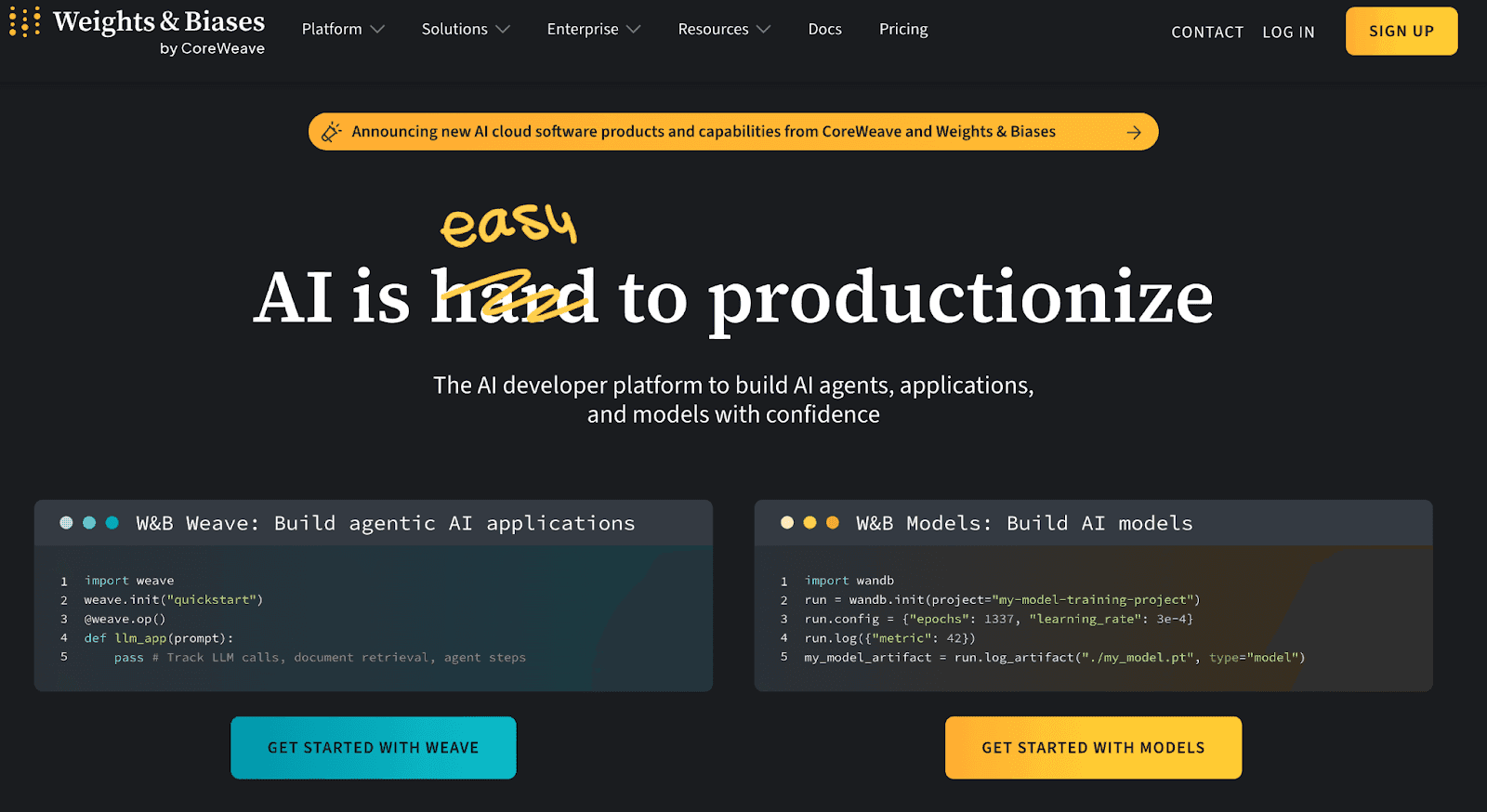

3. Weights & Biases (W&B) – Best for Experiment Tracking

Weights & Biases is built for teams that need detailed tracking, comparison, and reproducibility across ML experiments.

What are the Key LLM Observability & Monitoring Features?

Experiment logging records hyperparameters and metrics for easy model comparison.

Collaboration tools enable real-time sharing across distributed teams.

Artifact versioning tracks datasets and models to prevent duplication.

Framework integrations connect seamlessly with PyTorch, TensorFlow, and others.

What is the Pricing?

Cloud-Hosted Pricing

Free – $0/mo

Core experiment tracking for individuals and small teams.

Versioning, metrics visualization, and community support included.

Pro – $60+/mo per user

Collaboration-focused tools for growing teams.

Unlimited projects, notifications, CI/CD integration, and role-based access.

Enterprise – Custom

Advanced observability with compliance and security features.

Single-tenant deployment, HIPAA compliance, private networking, and priority support.

Privately Hosted Pricing

Personal – $0/mo

Core experiment tracking for individuals.

Registry and lineage tracking included.

Run a W&B server locally on any machine with Docker and Python installed.

For personal projects only. Corporate use is not allowed.

Advanced Enterprise – Custom

Flexible deployment and privacy controls for large organizations.

HIPAA compliance, private networking, and customer-managed encryption included.

Single Sign On, audit logs, and enterprise support with custom roles.

Run a W&B server locally on your own infrastructure with a free enterprise trial license.

Pros:

User-friendly interface facilitates easy experiment tracking.

Robust visualization tools aid in comparing model runs effectively.

Wide integration support enhances compatibility with various ML frameworks.

Cons:

Performance issues observed, especially with large datasets.

Documentation gaps may necessitate external resources for troubleshooting.

Limited offline capabilities could hinder usage in restricted environments.

Best For:

Teams that need structured experiment tracking and reproducibility for research and development workflows.


4. LangSmith – Best for Debugging and Improving LLM Workflows

LangSmith is tailored for teams that need fine-grained debugging and observability tools to refine AI-driven workflows efficiently.

What are the Key LLM Observability & Monitoring Features?

Trace visualizations clarify how requests and responses are connected.

Prompt debugging helps identify issues to reduce hallucinations and inconsistencies.

Error monitoring spots failed outputs for faster troubleshooting.

Usage analytics helps teams optimize resource allocation and cost.

What is the Pricing?

Developer – Free

Perfect for individual developers and hobby projects.

Includes 5,000 base traces per month, access to tracing and monitoring tools, and prompt improvement features.

Plus – $39/month per seat

Best for small teams building reliable AI agents.

Offers up to 10 seats, 10,000 base traces per month, higher rate limits, and email support for troubleshooting.

Enterprise – Custom Pricing

Tailored for large organizations with advanced deployment needs.

Provides flexible cloud or self-hosted options, SSO, role-based access control, dedicated engineering support, and SLAs.

Pros:

Comprehensive debugging tools assist in identifying and resolving issues.

Detailed trace views provide clarity on model interactions.

Resource-aware analytics aid in optimizing performance and costs.

Cons:

Technical setup may require onboarding and training.

Limited offline functionality for teams in constrained environments.

Scaling to enterprise levels may need additional infrastructure planning.

Best For:

Organizations focused on debugging and refining AI workflows with performance and cost insights.

5. Fiddler AI – Best for Explainability and Compliance

Fiddler AI helps teams build trust in AI by making models explainable, transparent, and aligned with regulations.

What are the Key LLM Observability & Monitoring Features?

Explainability Workflows: Walks users through interpreting model predictions with practical, step-by-step guidance.

Bias Attribution Engine: Identifies data and feature sources of bias, helping teams address fairness issues.

Adaptive Drift Alerts: Learns from patterns to highlight real, actionable deviations in model performance.

Governance Scorecards: Offers live dashboards that track compliance, fairness, and overall model health.

Scenario Simulation Studio: Lets teams test input changes and see their effects before deploying models.

Federated Learning Support: Facilitates privacy-preserving, distributed model training across locations.

What is the Pricing?

Free

Real-time guardrails to detect harmful exposure

Protections against hallucinations, toxicity, PII/PHI, prompt injection, and jailbreak attempts

Guardrail latency under 100 ms

Powered by contextual and task-specific Fiddler Trust Models

Developer – $0.002 per trace

Everything in Free, plus:

Unified AI observability, including tests and experiments, for agentic and predictive systems

Custom evaluators and bring your own judge

Visualization-driven insights

Role-based access control and SSO

SaaS deployment

Enterprise – Custom Pricing

Everything in Developer, plus:

Enterprise-grade guardrails

Enterprise-grade infrastructure scalability

Flexible deployment options (SaaS, VPC, or on-premise)

White glove support with dedicated communication channels

Named Customer Success Manager and customized onboarding

Pros

Explainability Support: Users appreciate the detailed workflows that help interpret AI model predictions.

Compliance Tools: Strong regulatory tracking and bias checks assist teams in meeting industry requirements.

Performance Insights: Adaptive alerts and scenario testing help maintain model accuracy and robustness.

Cons

Usability Challenges: Beginners may struggle with navigation due to limited tutorials and onboarding guidance.

Limited Free Tier: The free plan covers guardrails only; unified observability, custom evaluators, and other monitoring features require a paid plan, which makes it harder to evaluate the full product before committing.

Best For:

Enterprises in compliance-heavy and regulated industries that need transparent, fair, and explainable AI-driven decision-making along with real-time model monitoring.


Who Should and Shouldn’t Use LLM Observability & Monitoring Tools

Who Should Use Them

Enterprises scaling AI that need to monitor performance, control costs, and ensure compliance.

Data science teams that need deeper insights and faster debugging.

Organizations in regulated industries, such as healthcare and finance, that must meet strict compliance requirements.

Startups experimenting with AI that want to catch issues early.

Who Shouldn’t Use Them

Businesses with simple AI needs that don’t require advanced monitoring.

Small teams on tight budgets where enterprise tools are overkill.

Projects without real-time or compliance demands that don’t need complex setups.

Conclusion

LLM observability is no longer optional for teams running AI systems in production. As usage scales, visibility into model behavior, costs, performance, and risk becomes critical for maintaining reliability and trust.

Each tool covered in this guide addresses a different part of the observability problem. Some focus on deep model analysis and experimentation, others prioritize debugging, governance, or developer workflows. The right choice depends on your team’s scale, technical maturity, and operational priorities.

Upsolve stands out for teams that need a unified, production-ready observability layer that turns AI telemetry into actionable insights across stakeholders. By combining monitoring, cost visibility, and role-based dashboards, it helps organizations move from reactive troubleshooting to proactive AI operations.

Ultimately, the best LLM observability tool is the one that fits seamlessly into your workflows and helps your team act on issues before they impact users, costs, or compliance.

FAQs

What’s the difference between ML observability and LLM observability?

ML observability tracks traditional models such as fraud detection or recommendation systems, while LLM observability focuses on generative AI’s distinctive risks: hallucinations, bias, cost inefficiencies, and compliance challenges.

Do small teams need LLM observability tools?

Yes. Even small teams benefit from observability because it helps them control costs, catch errors early, and keep outputs safe. Platforms like Upsolve scale well for teams of all sizes.

How fast can companies see results?

With proper integration, results are often visible within weeks, especially through real-time dashboards and actionable alerts.

Are these tools compliant with regulations?

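Many leading LLM observability tools offer compliance features and certifications that support frameworks such as GDPR, HIPAA, and SOC 2. They do not make an organization compliant on their own, though; meeting regulatory requirements still depends on how you configure and use them.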

Can observability tools also improve prompts and models?

Yes, feedback loops from observability platforms help refine prompts, retrain models, and continuously optimize performance.

Are LLM observability tools actually used by startups and enterprises?

Yes. Startups and enterprises use LLM observability tools to ensure AI stays reliable, efficient, and compliant.
